Active Appearance Models (AAMs) and the Active Appearance algorithm, invented and developed by Gareth Edwards at the University of Manchester (now at Image Metrics), are powerful tools for image analysis. Here we use a modified version to track a face and its facial features in a video sequence.

The Active Appearance algorithm can be used to refine a rough initial estimate of the position, size and orientation of a face in an image, and also to extract the positions of facial features (mouth corners, eyebrows, ...). The colour-based face candidate finder currently included in the InterFace LLVA library is intended to provide the required initial estimate of the face location, size and in-plane rotation (see example).
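The refinement step can be illustrated with a minimal sketch of a generic AAM-style search loop. This is an assumption about the general shape of such an algorithm, not the modified version used here: the names `refine`, `residual` and `update_matrix` are hypothetical, and the toy residual stands in for the real difference between the image and the synthesized model appearance.

```python
from typing import Callable, List

Vector = List[float]


def refine(p0: Vector,
           residual: Callable[[Vector], Vector],
           update_matrix: List[Vector],
           max_iters: int = 20,
           tol: float = 1e-6) -> Vector:
    """Iteratively refine parameters with p <- p - k * (R * r(p)).

    `residual` and `update_matrix` (R) are hypothetical stand-ins for the
    appearance residual and a precomputed update matrix.
    """
    p = list(p0)
    err = sum(x * x for x in residual(p))
    for _ in range(max_iters):
        r = residual(p)
        # Predicted correction: one row of R per model parameter.
        delta = [sum(w * x for w, x in zip(row, r)) for row in update_matrix]
        # Try the full step first, then progressively smaller ones.
        for k in (1.0, 0.5, 0.25, 0.125):
            cand = [pi - k * di for pi, di in zip(p, delta)]
            cand_err = sum(x * x for x in residual(cand))
            if cand_err < err:
                p, err = cand, cand_err
                break
        else:
            break  # no step size reduced the error: stop
        if err < tol:
            break
    return p


# Toy problem: the "true" parameters are (3, -1) and the residual is linear.
def toy_residual(p: Vector) -> Vector:
    return [2.0 * (p[0] - 3.0), 2.0 * (p[1] + 1.0)]


# A deliberately imperfect update matrix, so several iterations are needed.
toy_R = [[0.4, 0.0], [0.0, 0.4]]

refined = refine([0.0, 0.0], toy_residual, toy_R)
```

In this toy setting the rough start `[0.0, 0.0]` is pulled towards the target over a handful of iterations; a real implementation would compute the residual from image pixels.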

The parameters currently extracted by the Active Appearance algorithm are 3D rotation, 2D translation, scale, and six Action Units (controlling the mouth and the eyebrows). The algorithm is iterative, and the current implementation needs slightly less than 5 ms per iteration per parameter (more than 50 ms per iteration for all 12 parameters) on a PC with a 500 MHz Pentium III processor.
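The parameter count and the timing figure can be made concrete with a small sketch. The class `FaceParameters` and the helper `iteration_budget_ms` are hypothetical names introduced only for illustration; the 5 ms constant is the upper bound quoted in the text, not a measured value.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FaceParameters:
    """Hypothetical container for the 12 parameters listed in the text."""
    rotation: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])  # 3D rotation
    translation: List[float] = field(default_factory=lambda: [0.0, 0.0])    # 2D translation
    scale: float = 1.0
    action_units: List[float] = field(default_factory=lambda: [0.0] * 6)    # mouth, eyebrows

    def as_vector(self) -> List[float]:
        return [*self.rotation, *self.translation, self.scale, *self.action_units]


# Upper bound from the text: slightly less than 5 ms per iteration per parameter.
MS_PER_ITERATION_PER_PARAMETER = 5.0


def iteration_budget_ms(n_params: int, n_iterations: int = 1) -> float:
    """Upper bound on fitting time under the per-parameter cost above."""
    return MS_PER_ITERATION_PER_PARAMETER * n_params * n_iterations


params = FaceParameters()
n_params = len(params.as_vector())
budget = iteration_budget_ms(n_params)
```

Here `n_params` is 12 and `budget` is an upper bound of 60 ms for one iteration, consistent with the figure of more than 50 ms quoted above.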

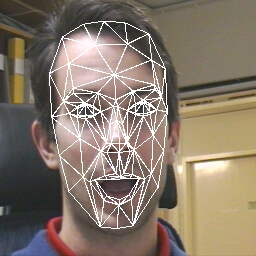

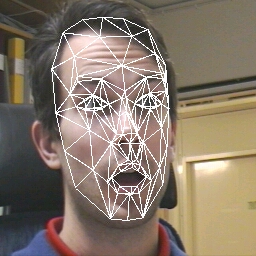

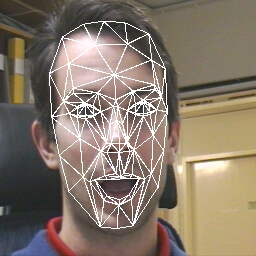

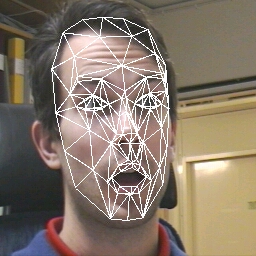

The Active Appearance algorithm can also be used for tracking, by simply letting the adaptation in one frame be the initial estimate for the following frame. This should be combined with some kind of fast motion estimation (at only a few points) and/or Kalman filtering to improve robustness and/or speed (the current implementation is too slow, needing at least 50 ms per frame).
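The warm-start tracking idea can be sketched as follows. The function `track` and the toy `toy_refine` are hypothetical; the optional constant-velocity prediction is a deliberately simple stand-in for the motion estimation or Kalman filtering mentioned above, not an implementation of either.

```python
from typing import Callable, Iterable, List, Optional

Vector = List[float]


def track(frames: Iterable[object],
          initial: Vector,
          refine: Callable[[object, Vector], Vector],
          predict_motion: bool = False) -> List[Vector]:
    """Fit each frame, starting from the previous frame's result."""
    results: List[Vector] = []
    prev: Optional[Vector] = None
    estimate = list(initial)
    for frame in frames:
        fitted = refine(frame, estimate)
        results.append(fitted)
        if predict_motion and prev is not None:
            # Constant-velocity guess: next = fitted + (fitted - prev).
            estimate = [2.0 * f - q for f, q in zip(fitted, prev)]
        else:
            # Plain warm start: reuse the adaptation from this frame.
            estimate = list(fitted)
        prev = fitted
    return results


# Toy setup: each "frame" is simply its true parameter vector, and the
# refiner is deliberately weak (it only moves halfway to the truth).
def toy_refine(frame: object, start: Vector) -> Vector:
    truth = frame  # type: ignore[assignment]
    return [s + 0.5 * (t - s) for s, t in zip(start, truth)]


toy_frames = [[float(t)] for t in range(5)]  # target drifting at constant speed

plain = track(toy_frames, [0.0], toy_refine)
predicted = track(toy_frames, [0.0], toy_refine, predict_motion=True)

plain_error = abs(plain[-1][0] - toy_frames[-1][0])
predicted_error = abs(predicted[-1][0] - toy_frames[-1][0])
```

In this toy case the plain warm start lags behind the steadily moving target, while the predicted start reduces the final error. A Kalman filter would additionally weigh the prediction against measurement noise.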

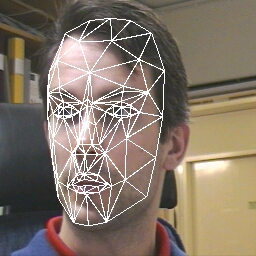

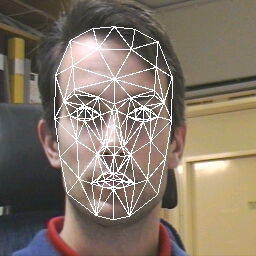

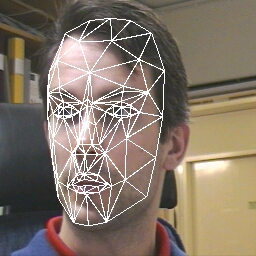

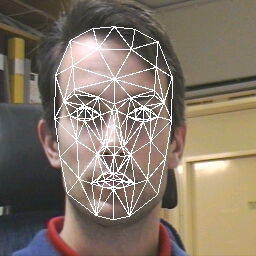

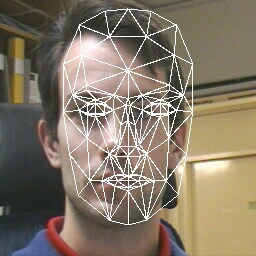

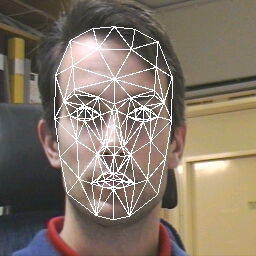

To try out the scheme, a sequence of 169 frames was recorded. In the first frame, the face model (Candide-3) was manually placed in the middle of the frame, as shown below (left), and the Active Appearance algorithm then refined the estimate, as shown below (right). The refined estimate from the first frame is used as the initial estimate for the second frame, and so on.

The entire image sequence (with the face model drawn on the frames) can be downloaded as an MPEG video file (3.89 MB). The face model parameters have also been converted to an MPEG-4 FAP file (in ASCII format; a binary version is upcoming). If you don't have an MPEG-4 Face Animation player, you can get the Facial Animation Engine from the University of Genova. You can also watch the animation result as an AVI video file, created by Stephane Garchery at MIRALab, University of Geneva.

The results are not perfect yet, but there are several possible ways to improve the scheme.

| Initial suggestion | Refined estimate |
|---|---|

Last modified: Wed Feb 2 13:56:02 2001